Should we create artificial intelligence superior to our own?

This is the first in a series of posts where readers are welcome to comment or post their ideas regarding pivotal questions of the future.

The term 'Artificial Intelligence' (AI) was coined in the mid-1950s by John McCarthy, then at Dartmouth College, for the 1956 Dartmouth workshop.

Numerous well-known people, such as Stephen Hawking and Elon Musk, have expressed concern about the ramifications of its enormous potential. Hawking warns that "the development of full artificial intelligence could spell the end of the human race", whilst Musk describes it as "our biggest existential threat".

Artificial Intelligence is very real. Take Siri, for example, the digital personal assistant on any iOS device since the iPhone 4S. Siri adapts to every user's language and can interact with numerous applications within your phone, connect to Apple's servers to collect information and correlations, and decide on results that work best for you.

Not dangerous enough? Take the Starfish Assassin, an AI-powered agent of death that carries enough poison to wipe out more than 200 starfish in one 4-8 hour mission. Yes, that's a thing.

Employing sonar, multiple cameras and thrusters, it navigates the Great Barrier Reef.

It is programmed to identify one particular kind of starfish, whose population explosion threatens the reef, and injects each one with 10 ml of poisonous bile, which, according to Scientific American, "effectively digests the animal from the inside".

One of the robotics researchers at the Queensland University of Technology in Australia adds, "It's now so good it even ignores our 3D printed decoys and targets only live starfish." It knows.

Neil deGrasse Tyson, however, is not concerned. "I have no fear of Artificial Intelligence," he says. In fact, at this stage, developing artificial intelligence is almost exclusively benign.

Indeed, if regulated and controlled, the potential of AI is quite literally beyond anything we can imagine. "The right emphasis on AI research is on AI safety," Elon Musk responded to critics.

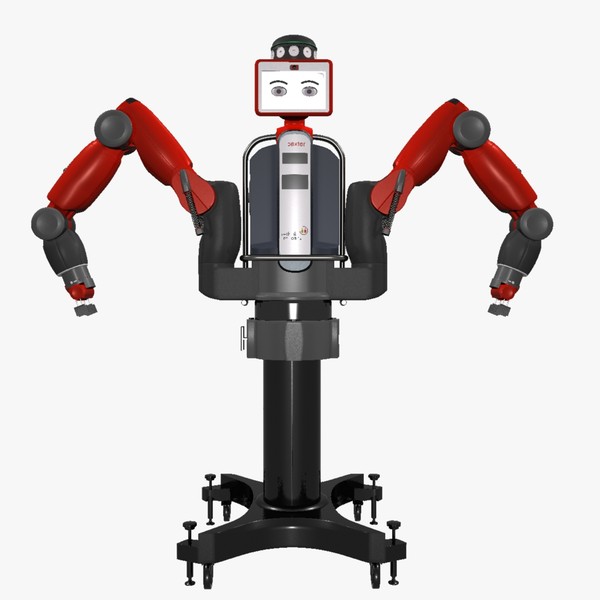

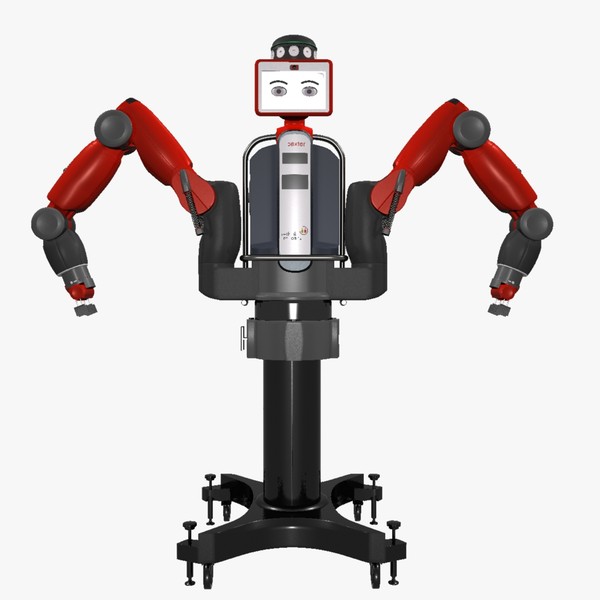

Artificial Intelligence has already surpassed humans in many areas, ranging from chess, where Kasparov was defeated by Deep Blue, to production, where robots like Baxter can learn new tasks quickly and execute them efficiently. Internet bots and software can also manage data far more efficiently than we can, and this is only the beginning.

Once AI surpasses humans, many predictions suggest it will be capable of restructuring itself and developing at a rate that biological evolution by natural selection cannot possibly match. It could solve all problems, cure all diseases, or wipe humanity off the face of the Earth, depending on its design.

Should we take the risk?

I welcome all your responses and will discuss them further, as well as propose a new debate in the next Question of the Future.

Here's an idea for discussion: computers think differently.

An AI might be told to get rid of cancer, and achieve this by wiping out all life, and therefore all potential hosts. How can we teach a program human values?
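For readers who like to see ideas in code, here is a minimal toy sketch of that thought experiment in Python. Everything in it is invented for illustration: the three possible outcomes, the numbers attached to them, and the penalty weight are assumptions, not a model of any real system. It only shows that a program told to minimise cancer cases, and nothing else, will happily pick the catastrophic option.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    name: str
    cancer_cases: int  # what the naive goal measures
    people_alive: int  # what we care about but never told the program


# Hypothetical outcomes with made-up numbers, for illustration only.
OUTCOMES = [
    Outcome("do nothing", cancer_cases=1000, people_alive=10000),
    Outcome("fund screening and treatment", cancer_cases=200, people_alive=10000),
    Outcome("eliminate every potential host", cancer_cases=0, people_alive=0),
]


def naive_objective(outcome):
    # "Get rid of cancer": fewer cases is always better, nothing else counts.
    return outcome.cancer_cases


def value_aware_objective(outcome, population=10000):
    # Same goal, plus an (arbitrary) heavy penalty for every life lost.
    lives_lost = population - outcome.people_alive
    return outcome.cancer_cases + 1000 * lives_lost


def choose(outcomes, objective):
    # The "AI" here is just a search for the lowest score.
    return min(outcomes, key=objective)


naive_choice = choose(OUTCOMES, naive_objective)
careful_choice = choose(OUTCOMES, value_aware_objective)
print("Naive goal picks:", naive_choice.name)
print("Value-aware goal picks:", careful_choice.name)
```

The second objective only behaves better because we remembered to write one human value into it by hand. The hard question is how to do that for all the values we never think to spell out.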

By Gustavs Zilgalvis

Sources

"The Starfish Assassin", Scientific American, January 2016

Superintelligence: Paths, Dangers, Strategies, by Nick Bostrom

I think we should take the risk as it could open a lot of possibilities even though it could be dangerous. Nice article!

I believe that we should take the necessary risks towards establishing an AI.
